Home Assistant & Volvo

Here’s a neat little trick for those of you using Home Assistant while also driving a Volvo.

To get your Volvo driving data (fuel level, battery state, …) into Home Assistant, there’s the excellent volvo2mqtt addon.

One little annoyance is that every time it starts up, you will receive an e-mail from Volvo with a two-factor authentication code, which you then have to enter in Home Assistant.

Fortunately, there’s a solution for that, you can automate this using the built-in imap support of Home Assistant, with an automation such as this one:

alias: Volvo OTP

description: ""

trigger:

- platform: event

event_type: imap_content

event_data:

initial: true

sender: [email protected]

subject: Your Volvo ID Verification code

condition: []

action:

- service: mqtt.publish

metadata: {}

data:

topic: volvoAAOS2mqtt/otp_code

payload: >-

{{ trigger.event.data['text'] | regex_findall_index(find='Your Volvo ID verification code is:\s+(\d+)', index=0) }}

- service: imap.delete

data:

entry: "{{ trigger.event.data['entry_id'] }}"

uid: "{{ trigger.event.data['uid'] }}"

mode: singleThis will post the OTP code to the right location and then delete the message from your inbox (if you’re using Google Mail, that means archiving it).

CommentsRecent tech reading

So much things going on these days, it’s already shaping up to be a pretty crazy year, in the good sense. Pretty much as I predicted at the start of the year, though it must be said that 2020 didn’t exactly raise the bar much. Pretty easy to clear that hurdle.

But that’s for another day. For now, here’s some interesting things I’ve been reading recently, in no particular order / theme:

Modules, monoliths, and microservices

Pretty common sense way of looking at this whole discussion. I’ve seen both ends of the spectrum and as always the right answer is: it depends. Inform yourself and choose wisely.

There certainly isn’t a solution that works for everyone, in every situation.

You need to be able to run your system

So much truth in this one. It requires a bit of investment, but it’s one of those things that act as a force multiplier: it speeds up developers, giving you faster development, more head-space to build a solid product and more time to focus on what actually matters.

Just consider the inverse: if you make their day jobs as cumbersome and frustrating as possible, how do you expect your development team to perform?

Any project I’ve helped roll this way of working out has benefited massively, so I recommend it each and every time. Talk to me if you need help with this.

Breaking down and fixing Kubernetes

As an ops person, I’m a big fan of these kind of fire drills, where you deliberatly damage a system and then try to fix it. Doing this as an exercise, when things aren’t on fire, gives you so much more confidence when things do break down for real.

Comments2021

And suddenly, before you notice it, the year has passed. And what a year it has been…

It’s easy to brush 2020 off as a year to quickly forget, given the pandemic we suddenly find ourselves in. But I’d rather not. Looking back, despite everthing we took for granted but currently can no longer do, it’s been a year full of great experiences, new friends, new business, launching things and lots of joy with the family.

I for one am very optimistic and excited what 2021 brings in terms of plot twists. You can’t always predict what will come, but flexibility goes a long way. Onwards and upwards!

CommentsAngular: ng not watching for changes

Running Linux and noticed that ng serve or ng test don’t observe changes

and thus won’t reload/test automatically?

This might be caused by simply having too many files in your tree, causing your system to hit an internal limit. This limit can be raised using the following command:

echo 524288 | sudo tee /proc/sys/fs/inotify/max_user_watches

You’ll have to do this after every boot. Want it persistently? Use the following:

echo fs.inotify.max_user_watches=524288 | sudo tee /etc/sysctl.d/40-max-user-watches.conf

sudo sysctl --system

Rust: First Impressions

I’ve been studying the Rust Programming Language over the holidays, here are some of my first impressions. My main interest in Rust is compiling performance-critical code to WebAssembly for usage in the browser.

Rust is an ambitious language: it tries to eliminate broad categories of browser errors by detecting them during compilation. This requires more help from the programmer: reasoning about what a program does exactly is famously impossible (the halting problem), but that doesn’t mean we can’t think about some aspects, provided that we give the compiler the right inputs. Memory management is the big thing in Rust where this applies. By indicating where a value is owned and where it is only temporarily borrowed, the compiler is able to infer the life-cycle of values. Similar things apply for type safety, handling errors, multi-threading and preventing null references.

All very cool off-course, but nothing in life is for free: it requires a much higher level of precise input with regards to what exactly you’re trying to achieve. So programming in Rust is less careless than other languages, but the end result is guaranteed correctness. I’d say that’s worth it.

This very strict mode of compilation also means that the compiler is very picky about what it accepts. You can expect many error messages and much fighting (initially) to even get your program to compile. The error messages are very good though, so usually (but not always) they give a pretty good indication of what to fix. And once it compiles you’re rather certain that the result is good.

Another consequence is that Rust is by no means a small language. Compared to the rather succinct Go, there’s an enormous amount of concepts and syntax. All needed, but it certainly doesn’t make things easier to read.

Other random thoughts:

- It’s a mistake to see a reference as a pointer. They’re not the same thing, but it’s very easy to confuse them while learning Rust. Thinking about moving ownership takes some adaptation.

- Lifetimes are hard and confusing at first. This is one of the points where I feel you spend more attention to getting the language right than the actual functionality of your code.

- Rust has the same composable IO abstractions (Read/Write) as in the Go io package. These are excellent and a joy to work with.

- My main worry is the complexity of the language: each new corner-case of

correctness will lead to the addition of more language complexity. Have we

reached the end or will things keep getting harder? One example of where the

model already feels like it’s reaching the limits is

RefCell.

In all, I’d say Rust is a good addition to the toolbox, for places where it makes sense. But I don’t foresee it replacing Go yet as my go-to language on the backend. It all comes down to the situation, finding the right balance between the need for performance/correctness and productivity: the right tool for the job. To be continued.

CommentsGo: io.Reader gotchas

I’ve really come to appreciate the elegance in the io

abstractions in Go. The seemingly simple patterns of

io.Reader and io.Writer open up a world of easily composable data

pipelines.

Need to add compression? Just wrap the Writer with a gzip.Writer, etc.

But there are some subtleties to be aware off, that might bite you.

Let’s have a look at the description of io.Reader.Read():

Read(p []byte) (n int, err error)Read reads up to len(p) bytes into p. It returns the number of bytes read (0 <= n <= len(p)) and any error encountered. Even if Read returns n < len(p), it may use all of p as scratch space during the call. If some data is available but not len(p) bytes, Read conventionally returns what is available instead of waiting for more.

This is fairly straightforward. You call Read() with a byte slice, which it

may fill up. The key point here being may. Most IO sources (e.g. a file) will

generally read the full buffer, until you reach the end of the file.

But not all of them. For instance, a gzip.Writer tends to do incomplete

reads, requiring multiple Read() calls.

Recommendation: If you need to read a buffer in full, use

io.ReadFull() instead of Read().

When Read encounters an error or end-of-file condition after successfully reading n > 0 bytes, it returns the number of bytes read. It may return the (non-nil) error from the same call or return the error (and n == 0) from a subsequent call. An instance of this general case is that a Reader returning a non-zero number of bytes at the end of the input stream may return either err == EOF or err == nil. The next Read should return 0, EOF.

Callers should always process the n > 0 bytes returned before considering the error err. Doing so correctly handles I/O errors that happen after reading some bytes and also both of the allowed EOF behaviors.

This means it’s perfectly legal to return both n (and thus read a number of

bytes) and an error at the same time.

It also means that the standard pattern of immediately checking for an error is wrong:

// Don't do this

n, err := in.Read(buf)

if err != nil {

// Handle err

}

// Do something with n and bufAlways process n / buf first, then check for the presence of an error.

Implementations of Read are discouraged from returning a zero byte count with a nil error, except when len(p) == 0. Callers should treat a return of 0 and nil as indicating that nothing happened; in particular it does not indicate EOF.

The important take-away here: always check for err == io.EOF, some

implementations might give you an empty read even if there is still data to

come.

Running into either of these corner cases is generally rare, since most IO sources are quite well-behaved. But being aware of the corner cases will save you a massive amount of debugging once you do run into them.

CommentsGo: JSON and broken APIs

If you’ve ever used Go to decode the JSON response returned by a PHP API, you’ll probably have ran into this error:

json: cannot unmarshal array into Go struct field Obj.field of type map[string]string

The problem here being that PHP, rather than returning the empty object you

expected ({}), returns an empty array ([]). Not completely unexpected: in

PHP there’s no difference between maps/objects and arrays.

Sometimes you can fix the server:

return (object)$mything;This ensures that an empty $mything becomes {}.

But that’s not always possible, you might have to work around it on the client. With Go, it’s not all that hard.

First, define a custom type for your object:

type MyObj struct {

...

Field map[string]string `json:"field"`

...

}Becomes:

type MyField map[string]string

type MyObj struct {

...

Field MyField `json:"field"`

...

}Then implement the Unmarshaler interface:

func (t *MyField) UnmarshalJSON(in []byte) error {

if bytes.Equal(in, []byte("[]")) {

return nil

}

m := (*map[string]string)(t)

return json.Unmarshal(in, m)

}And that’s it! JSON deserialization will now gracefully ignore empty arrays returned by PHP.

Some things of note:

- The method is defined on a pointer receiver (

*MyField). This is needed to correctly update the underlying map. - I’m casting the

tobject tomap[string]string. This avoids infinite recursion when we later calljson.Unmarshal().

Retro Operations

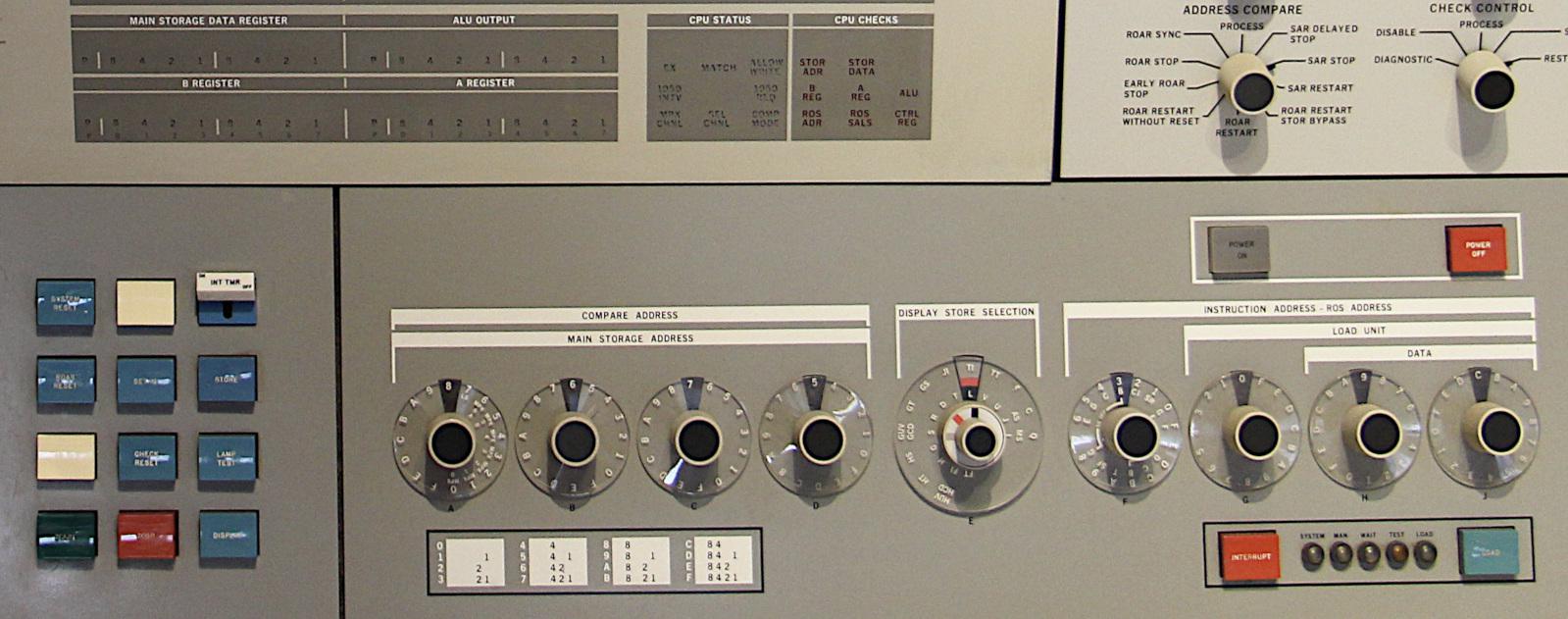

In his post Iconic consoles of the IBM System/360 mainframes, 55 years old, Ken Shirrif gives a beautiful overview of how IBM mainframes were operated.

I particularly liked this bit:

The second console function was “operator intervention”: program debugging tasks such as examining and modifying memory or registers and setting breakpoints. The Model 30 console controls below were used for operator intervention. To display memory contents, the operator selected an address with the four hexadecimal dials on the left and pushed the Display button, displaying data on the lights above the dials. To modify memory, the operator entered a byte using the two hex dials on the far right and pushed the Store button. (Although the Model 30 had a 32-bit architecture, it operated on one byte at a time, trading off speed for lower cost.) The Address Compare knob in the upper right set a breakpoint.

Debugging a program was built right into the hardware, to be performed at the console of the machine. Considering the fact that these machines were usually placed in rooms optimized for the machine rather than the human, that must have been a difficult job. Think about that the next time you’re poking at a Kubernetes cluster using your laptop, in the comfort of your home.

Also recommended is the book Core Memory: A Visual Survey of Vintage Computers. It really shows the intricate beauty of some of the earlier computers. It also shows how incredibly cumbersome these machines must have been to handle.

Even when you’re in IT operations, it’s getting more and more rare to see actual hardware and that’s probably a good thing. It never hurts to look at history to get a taste of how far we’ve come. Life in operations has never been more comfortable: let’s enjoy it by celebrating the past!

CommentsNew beginnings

A couple of weeks ago our first-born daughter appeared into my life. All the clichés of what this miracle does with a man are very well true. Not only is this (quite literally) the start of a new life, it also gives you a pause to reflect on your own life.

Around the same time I’ve finished working on the project that has occupied most of my time over the past years: helping a software-as-a-service company completely modernize and rearchitect their software stack, to help it grow further in the coming decade.

Going forward, I’ll be applying the valuable lessons learned while doing this, combined with all my previous experiences, as a consultant. More specifically I’ll be focusing on DevOps and related concerns. More information on that can be found on this page.

I also have a new business venture in the works, but that’s the subject of a future post.

CommentsLet's talk about the developer experience

Yesterday, at the AWS User Group Belgium Meetup I presented a short lightning talk. It was a call to action for the fact that operations people should pay more attention to the developer experience.

Annotated slides of the talk can be found here.

This is an important subject to me: how can we make sure developers stay productive in the ever more complex environment of the cloud.

Photo: Nils De Moor

Photo: Nils De Moor