Deploying Node.js with systemd

On Jan 16, 2013, I gave a talk on deploying Node.js with systemd at the Belgian node.js User Group. Below is an annotated version of my slides.

You can also download a PDF of the slides (without notes) if you prefer.

A short introduction

This talk is about how we deploy Node.js. Using one of the widely available deployment platforms (such as Heroku, NodeJitsu or the Joyent Cloud) gives you access to a highly advanced and modern platform in a heartbeat. I’ll show you how you can get some of that magic too, if you prefer to run on your own infrastructure.

Short version: I’ve done a bit of everything. The longer version can be read on the about page.

Update (Dec 3, 2013): I’m no longer involved with Flow Pilots and am currently available for contracting, if the project is interesting. If you need help with the things on this page, send me an email.

Flow Pilots is actually two businesses in one:

- Built-to-measure mobile applications for enterprise customers, designed around the client’s exact needs.

- Products, intended to be reused at scale.

Each of these requires backends and for that we use Node.js.

We’ll have a look at how we deploy Node.js at Flow Pilots. You’ll see that it’s actually not that hard to set up a modern runtime environment for Node.js. All of this applies to Linux, whether you run your own server or run somewhere in the cloud (Amazon EC2, Linode, …).

Setting up Node.js on your server

Deploying Node.js is somewhat complex. Before we dive into that, let’s have a look at our favourite programming language:

Obviously, that’s PHP ;-). Well, it’s what we used to write. A day in the life of a PHP developer is rather simple: write code, upload, reload. No further steps needed. The same applies to system administrators: install the PHP module and you’re done. No need to touch the server again.

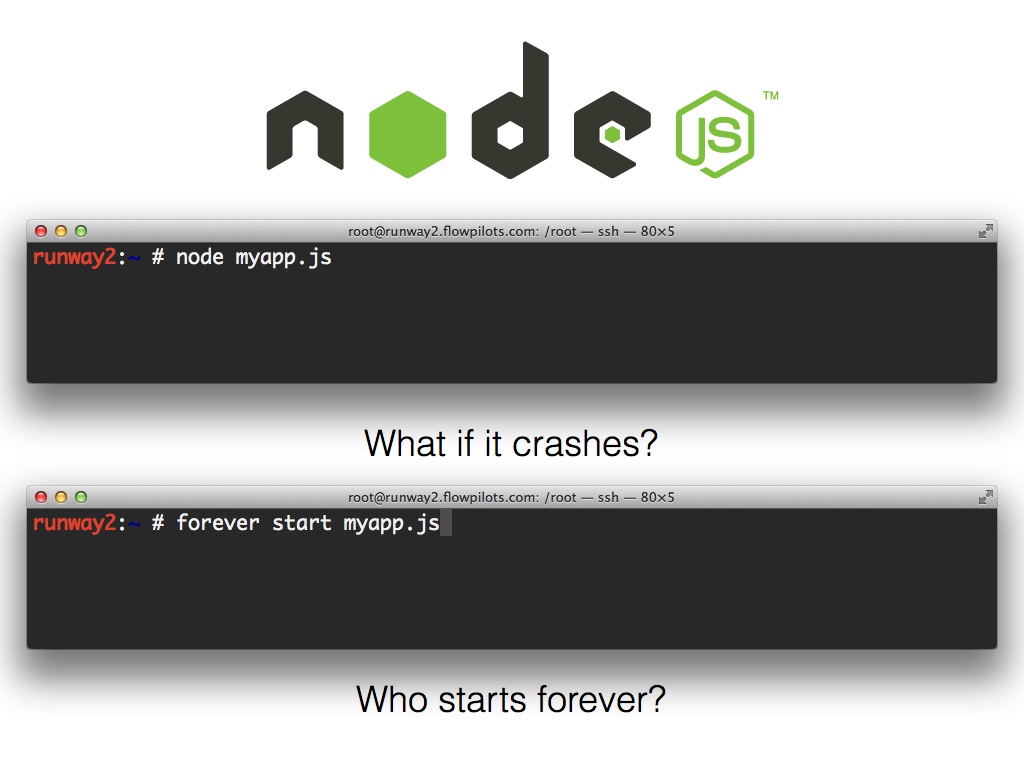

Node.js is a different story: it requires a perpetually running Node process. This means you have to a) start it and b) make sure it keeps running. The upside is that we can do advanced things like websockets or long-running tasks. It’s one of the reasons people love Node.js.

Simply starting node on your server is a recipe for disaster. If it crashes (and at some point it will; no code is perfect), your service will be wiped off the internet.

You can use a tool like mon (written by TJ Holowaychuk) or forever, which will respawn your service when needed.

But what happens when your server reboots? Who starts the process monitor?

Turns out every Linux distribution has this functionality built-in: the init system. We decided to cut out the middle-man: no mon, no forever. We’ll let our process manager take care of launching node.

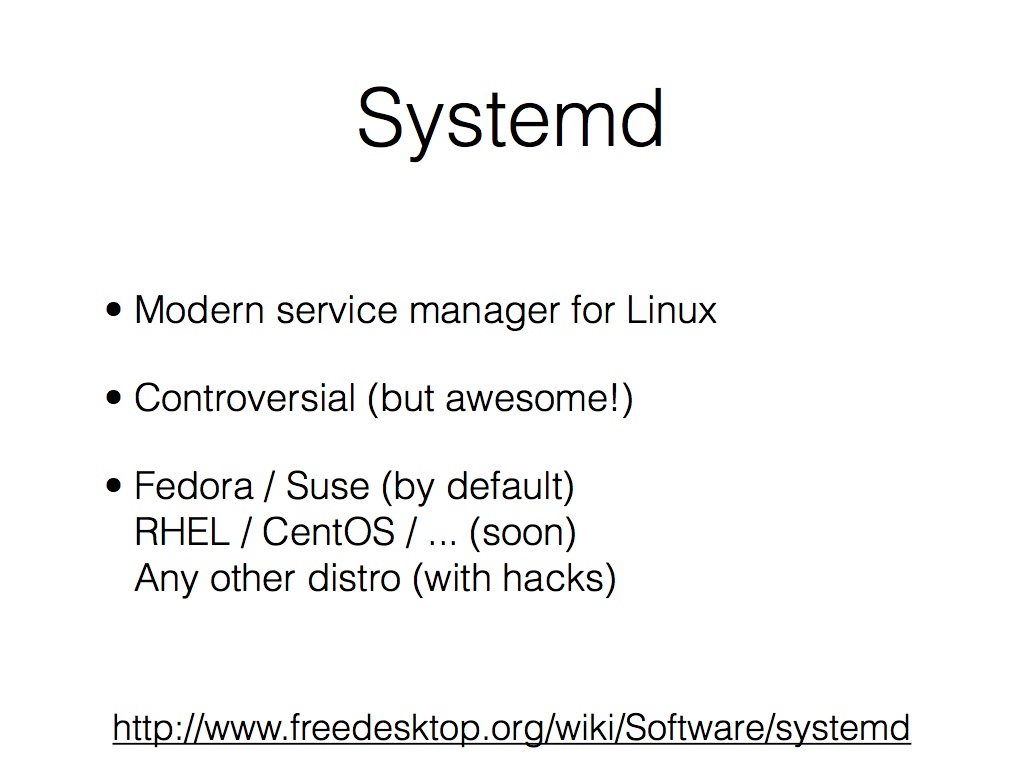

For our distribution (Fedora), that’s systemd. Systemd is a modern service manager for Linux: it throws away the legacy of sysvinit and brings Linux to the forefront in terms of process management. It’s also surrounded by controversy, mostly because a lot of people in the open-source community are afraid of change. We don’t let that bother us though: there’s so much awesome in systemd that you’d be foolish not to take advantage of it.

You can also get it on pretty much any distribution, though your mileage may vary.

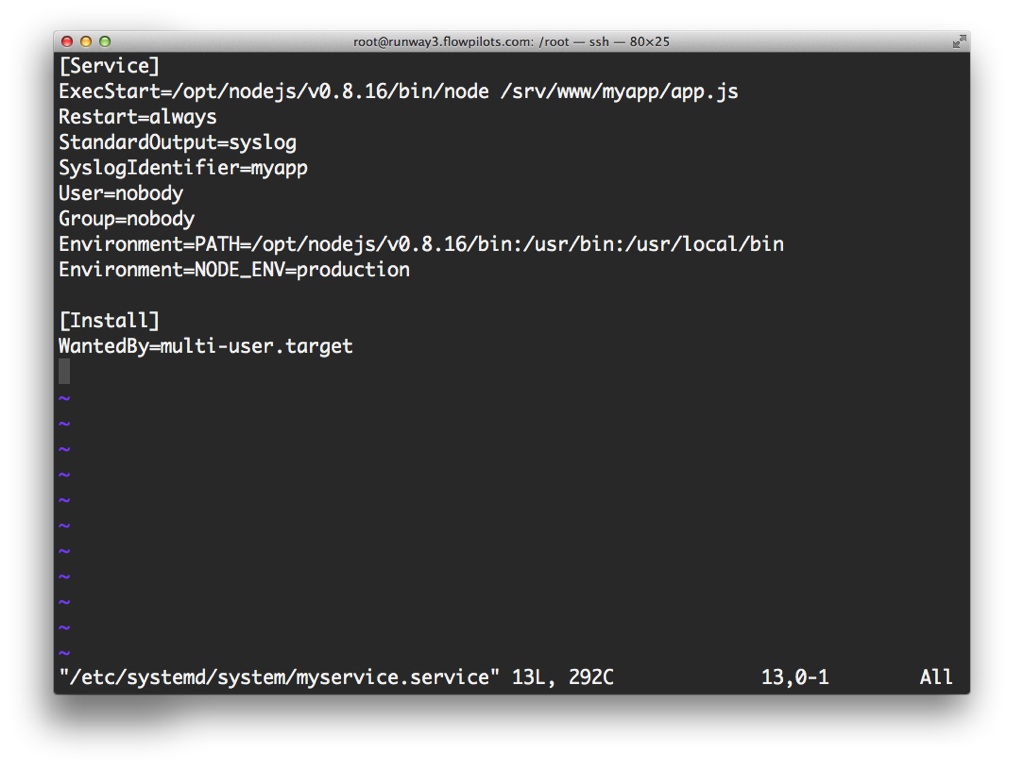

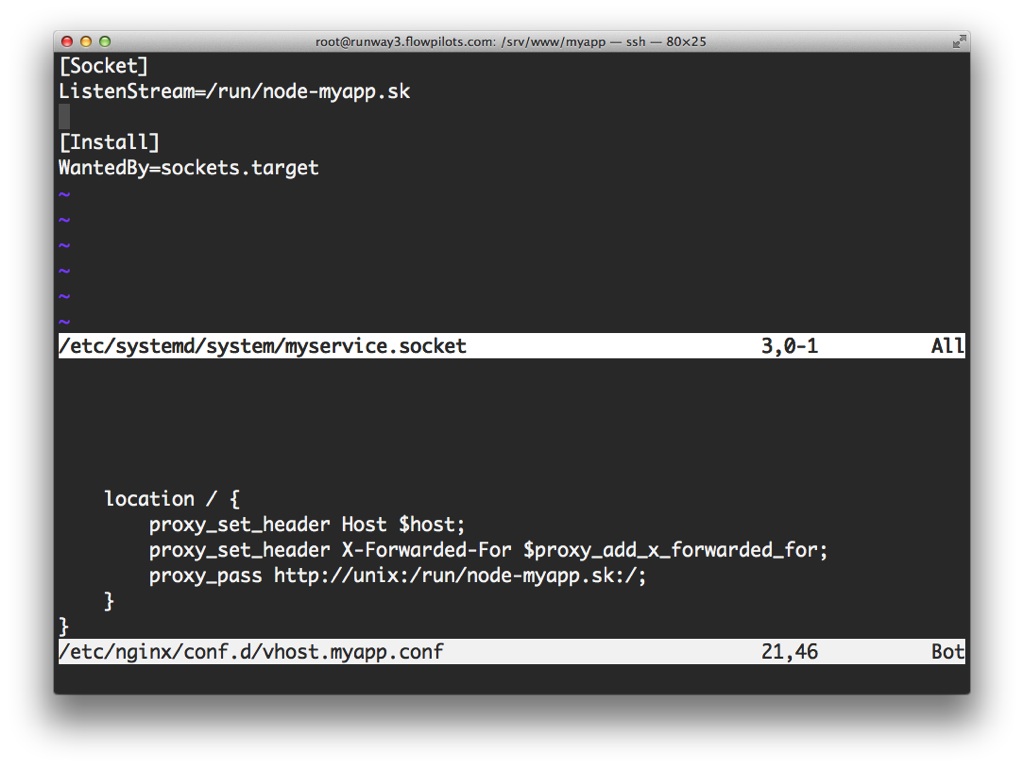

Let’s have a look at how you start and monitor a Node.js daemon with systemd. First, you’ll need to write a systemd unit file, which is a definition of your service:

The first two directives are key: ExecStart defines what should be run (we include the full path to node because we run multiple versions in parallel), and Restart defines the restart policy for this service. In this case: make sure it is always running.

The other lines in the Service block control logging, permissions and environment variables. I recommend you add a NODE_ENV variable with a value of production to all your deployed apps. In express.js, for example, this enables things like view caching. We’ll also use it below.

The Install section defines how and when this unit file should be activated. In this case: whenever the system has booted up.
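To make these directives concrete, here is a minimal sketch of a small Node.js helper that renders a unit file along those lines. It is not the unit file from the talk: the description, node path, script path and user are hypothetical placeholders, and sending stdout/stderr to the journal is our assumption about what the logging lines looked like.

```javascript
// Minimal sketch: render a systemd service unit with the directives discussed.
// Every name and path passed in is a placeholder, not the talk's original file.
function renderServiceUnit({ description, nodeBin, script, user, env = {} }) {
  return [
    '[Unit]',
    `Description=${description}`,
    '',
    '[Service]',
    // Full path to node, because several node versions may be installed.
    `ExecStart=${nodeBin} ${script}`,
    // Restart policy: bring the process back whenever it exits.
    'Restart=always',
    // Send stdout/stderr to the journal (assumed logging setup).
    'StandardOutput=journal',
    'StandardError=journal',
    // Run as an unprivileged user instead of root.
    `User=${user}`,
    `Group=${user}`,
    // One Environment= line per variable, e.g. NODE_ENV=production.
    ...Object.entries(env).map(([key, value]) => `Environment=${key}=${value}`),
    '',
    '[Install]',
    // Activate the unit once the system has booted into multi-user mode.
    'WantedBy=multi-user.target',
    '',
  ].join('\n');
}

// Hypothetical example; the output could be saved as
// /etc/systemd/system/node-sample.service
console.log(renderServiceUnit({
  description: 'Sample Node.js app',
  nodeBin: '/opt/node/bin/node',
  script: '/srv/www/node-sample/server.js',
  user: 'node-sample',
  env: { NODE_ENV: 'production' },
}));
```

After adding or changing a unit file, run systemctl daemon-reload so systemd picks it up.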

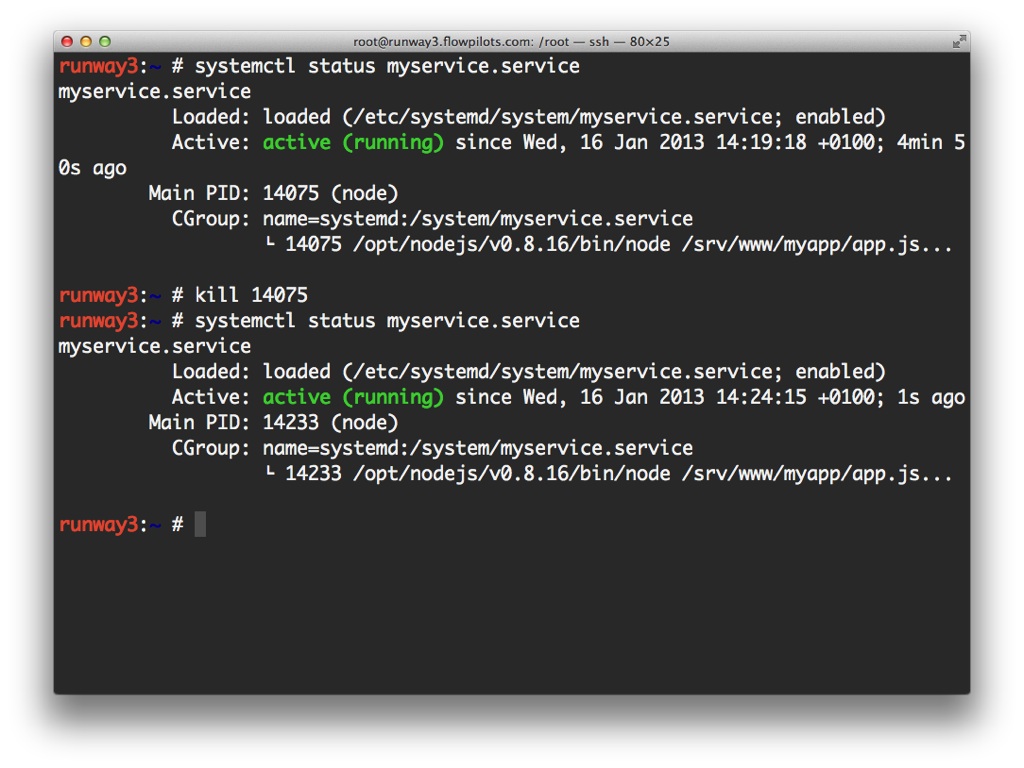

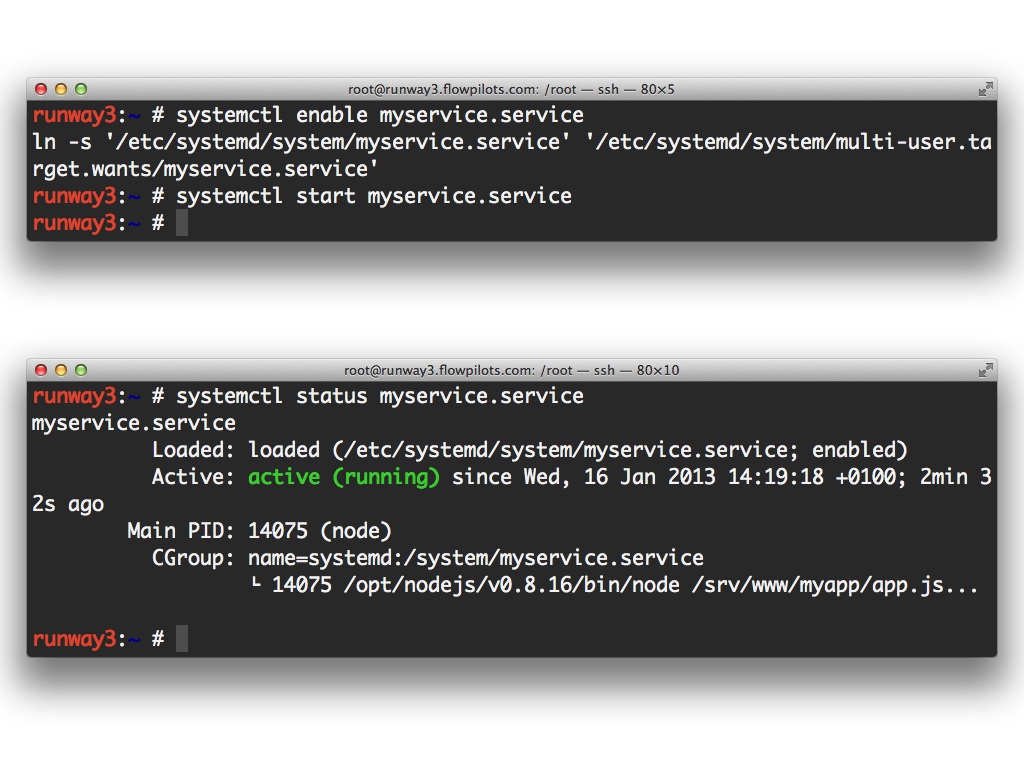

Enable and start the service to get it running:

You only need to start it manually the first time; when you reboot your machine, systemd will take care of it (that’s what the Install section is for). You can verify that it’s running with the status command. If you kill the service, systemd will restart it.

Notice how the PID (process ID) changes.

There you have it: systemd is now running and monitoring our node daemon. That’s just the start, though. There are much nicer things we can do.

Socket Activation

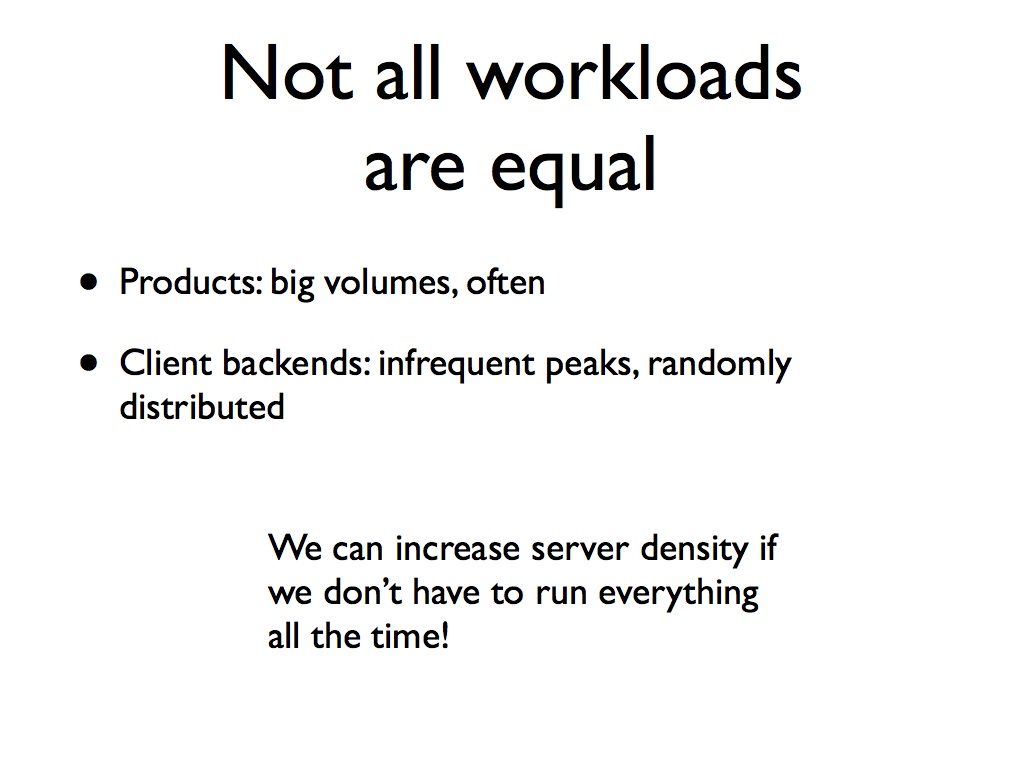

Remember how we have two different types of applications at Flow Pilots? The product backends are used pretty much all the time.

But for the client backends, it’s a very different story: some apps (and backends) are only used during specific events, which may only amount to a couple of days per year. The rest of the time, we might as well just shut them off.

That’s exactly what we’ll be doing. By only running the services that are actually in use, you can drastically increase the server density.

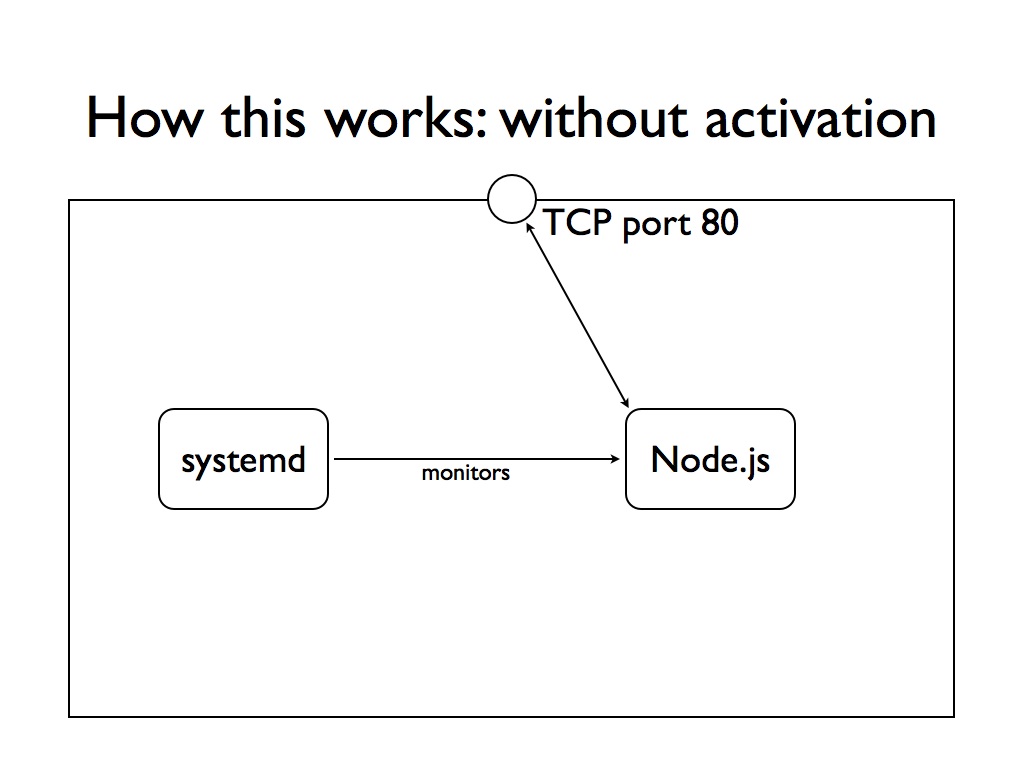

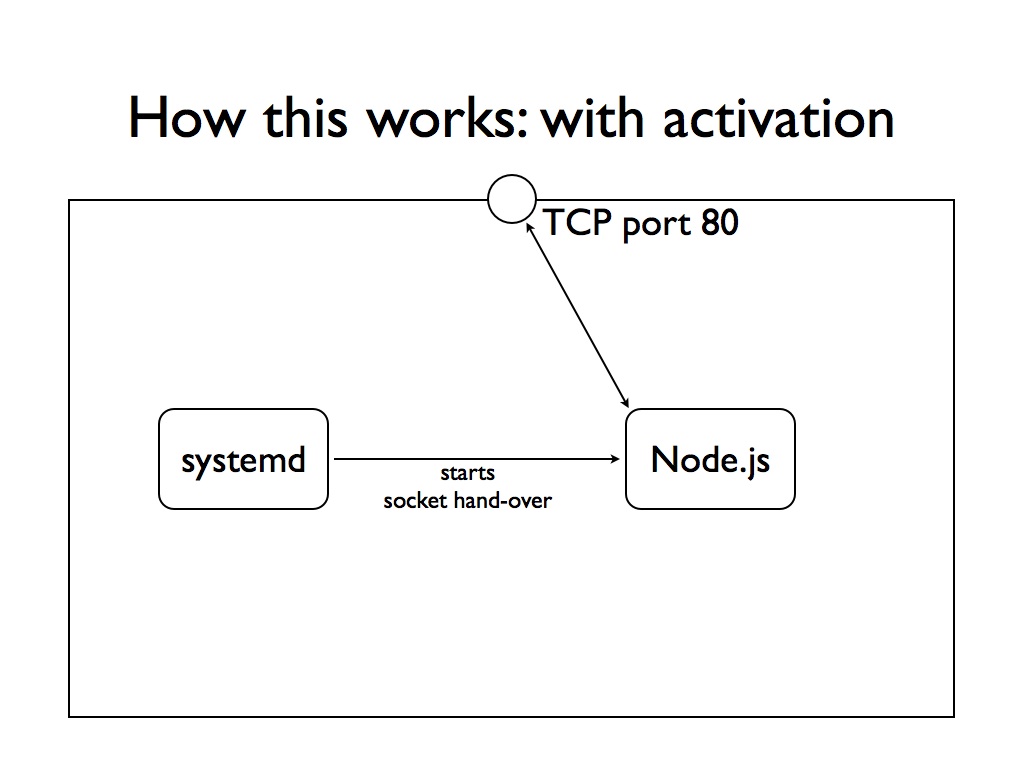

Without socket activation, the mental picture looks somewhat like the diagram above. Node.js listens on the TCP port and serves requests. Systemd monitors Node.js and restarts it when needed.

Let’s change this.

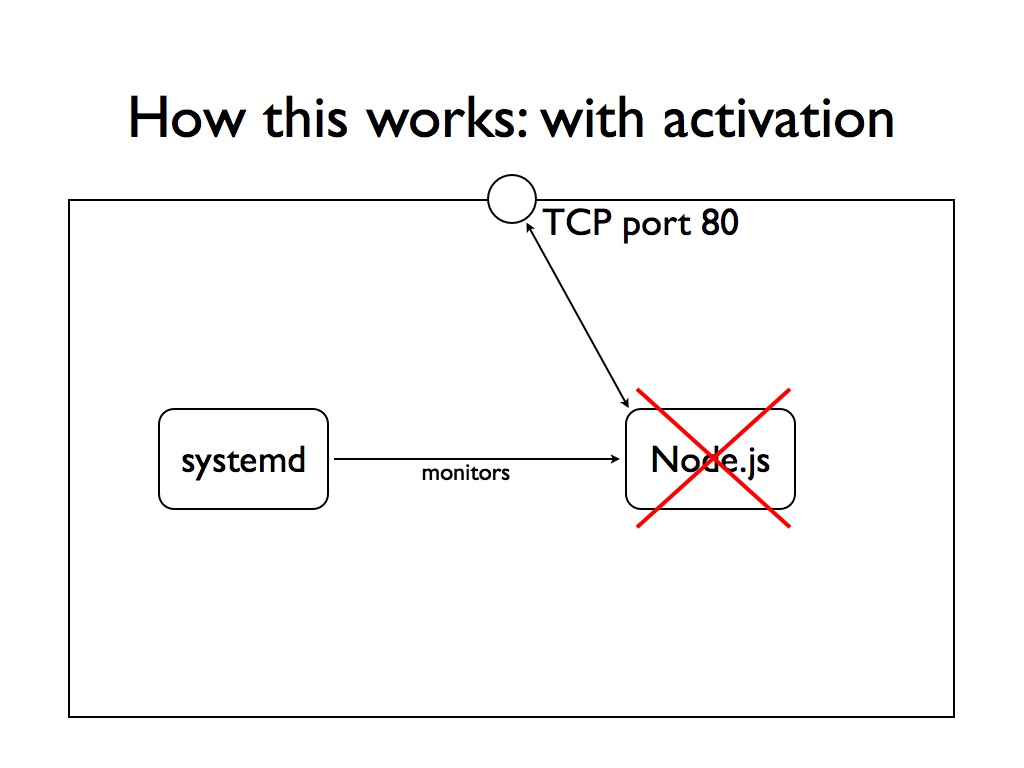

First, we’ll stop running Node.js.

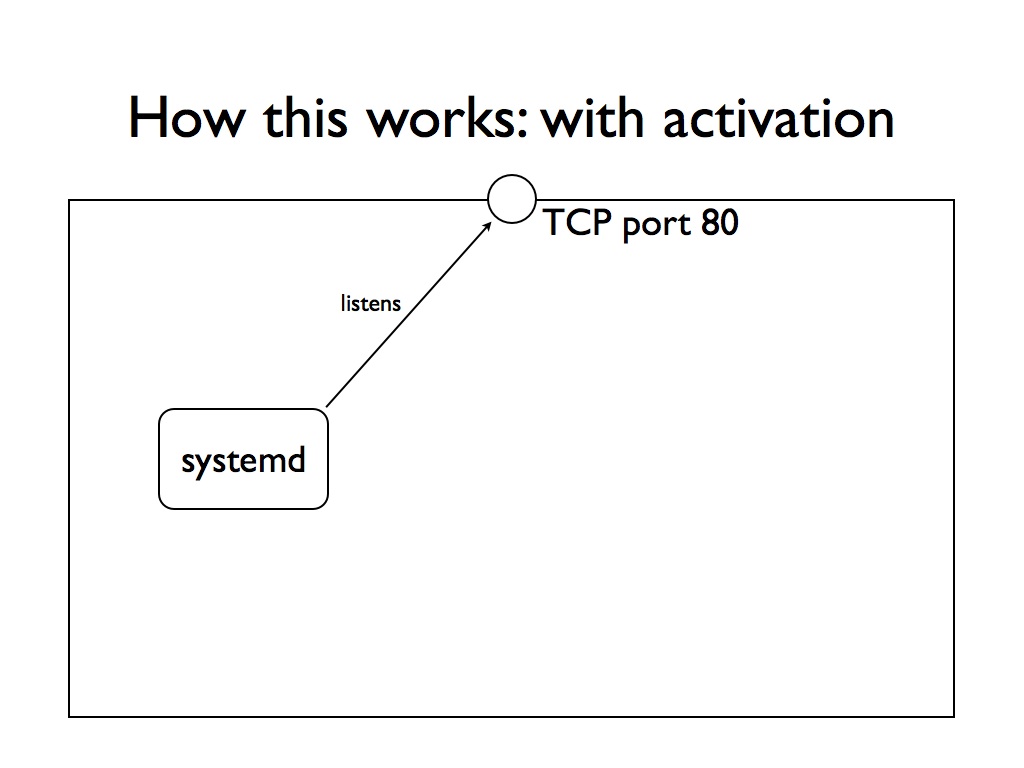

Instead, we’ll configure systemd to monitor the TCP port:

When a request comes in, systemd will spawn Node.js and hand over the socket. This is completely transparent: the client never notices. From then on, Node.js handles all requests, and systemd goes back to monitoring Node.js.

When Node.js is done, we’ll have it shut down automatically. The monitoring of the TCP port will be picked up again by systemd and the cycle can start again.
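Under the hood, the hand-over follows a simple convention: systemd passes the listening sockets as already-open file descriptors starting at 3, and describes them with the LISTEN_PID and LISTEN_FDS environment variables. The sketch below parses those variables in plain Node.js. It illustrates the protocol only; it is not node-systemd’s actual code, and the fallback port 3000 in the usage comment is a placeholder.

```javascript
// Sketch of the sd_listen_fds() convention used for socket activation.
const SD_LISTEN_FDS_START = 3; // first passed-in descriptor (0-2 are stdio)

function activatedSocketFds(env = process.env, pid = process.pid) {
  // LISTEN_PID must name this process, so variables inherited from a parent
  // that was itself socket-activated are ignored.
  if (Number(env.LISTEN_PID) !== pid) return [];
  const count = Number.parseInt(env.LISTEN_FDS, 10);
  if (!Number.isInteger(count) || count < 1) return [];
  // Descriptors are numbered consecutively: 3, 4, 5, ...
  return Array.from({ length: count }, (_, i) => SD_LISTEN_FDS_START + i);
}

// Usage: listen on the inherited socket, or on a port when run stand-alone.
// const http = require('http');
// const [fd] = activatedSocketFds();
// http.createServer(handler).listen(fd !== undefined ? { fd } : 3000);
```

The C helper sd_listen_fds() can optionally unset these variables so that child processes don’t see them; a production version would do the same.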

That’s the theory; let’s put it into practice. We’ll be tweaking a simple app to support socket activation.

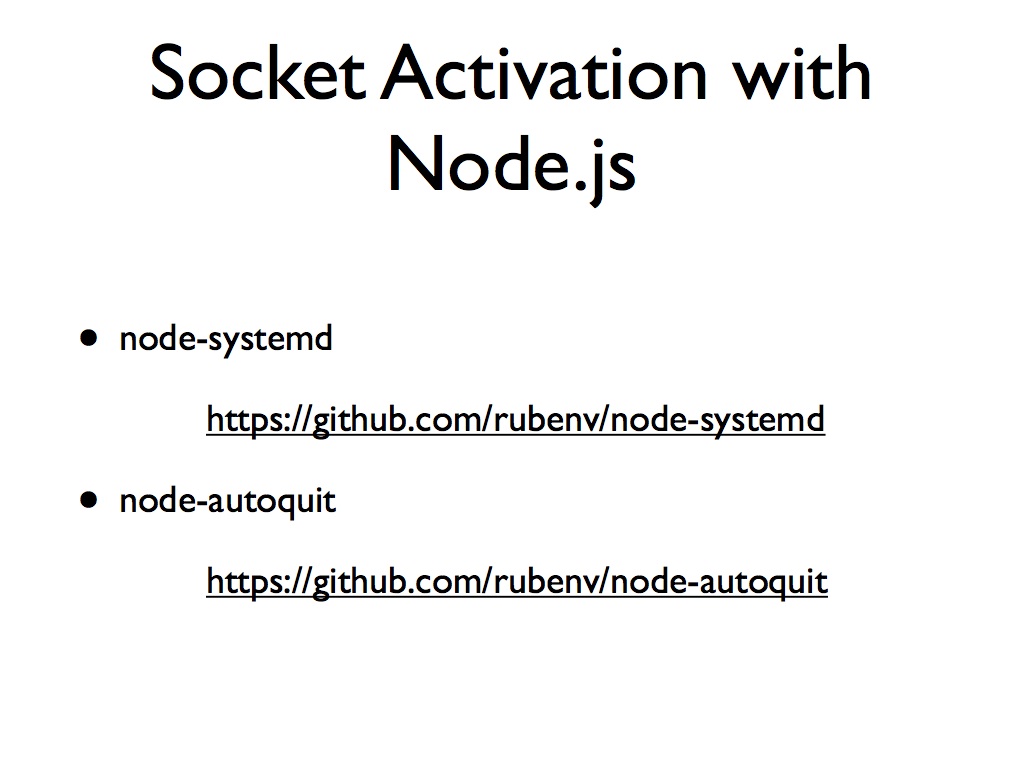

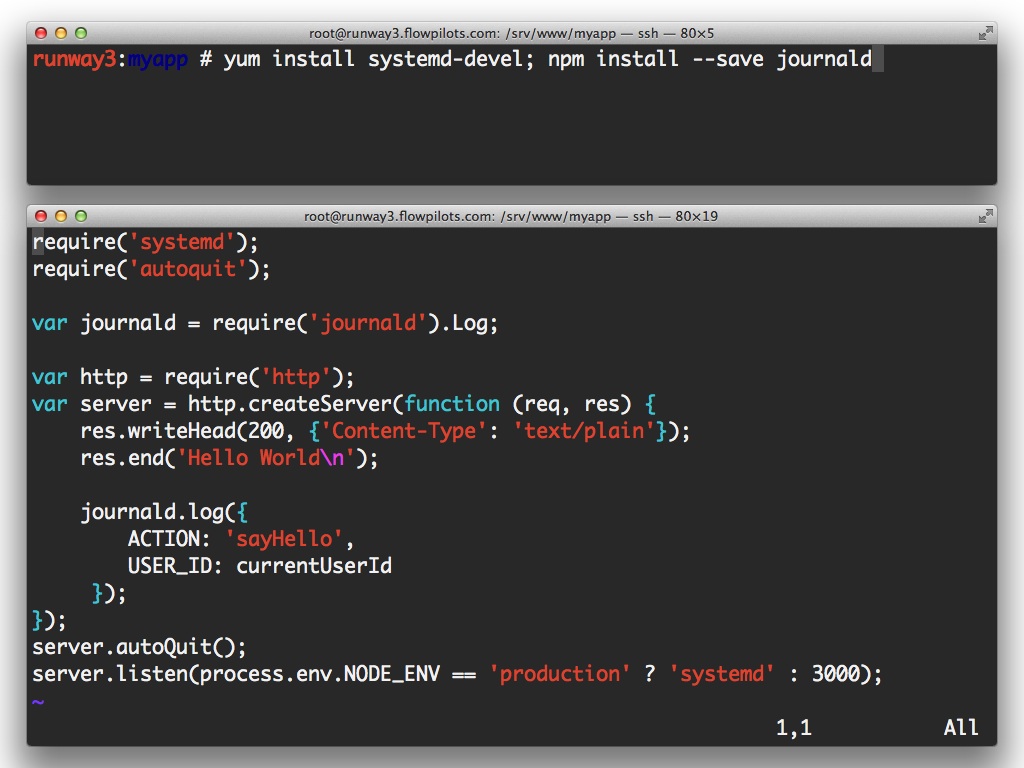

You’ll need two modules, both available on GitHub and through npm:

- node-systemd: takes care of handing over activated sockets

- node-autoquit: shuts down Node.js when idle

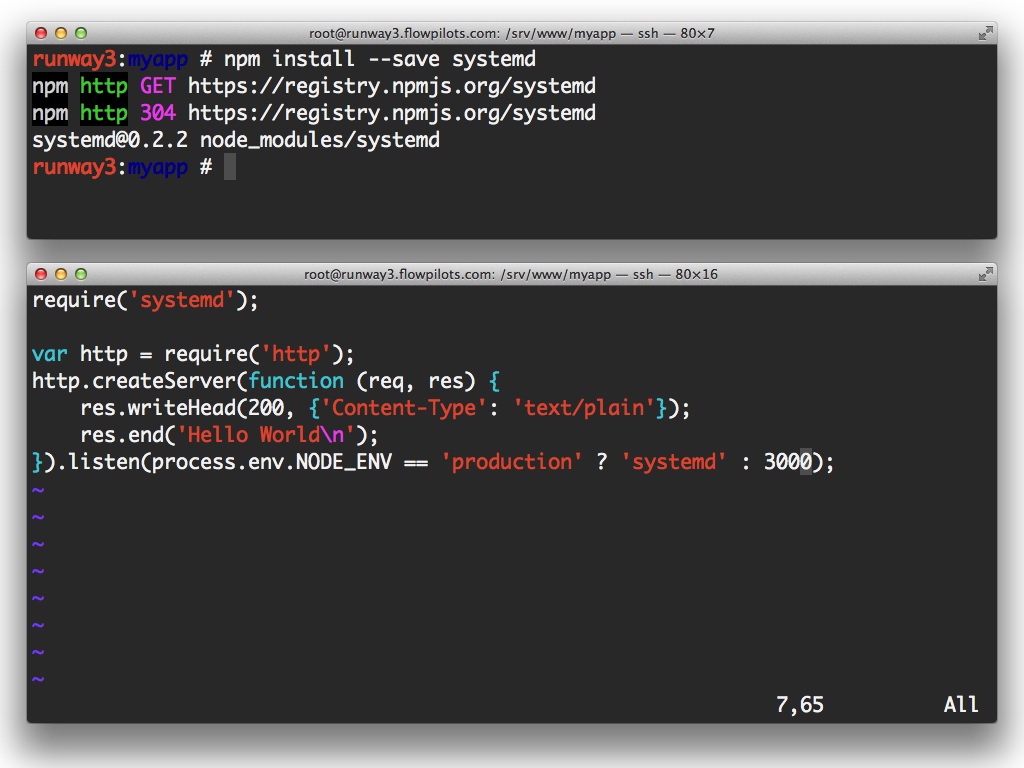

Step 1: Add node-systemd

- Install the systemd module. The awesome --save parameter automatically adds the dependency to your package.json file.

- Change the listen call: replace the numerical port argument with the string 'systemd'. Or, as we like to do it, make that conditional on the availability of an environment variable. That way you’ll still be able to run the app stand-alone.
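A minimal sketch of that conditional. We assume LISTEN_FDS is the variable to test (systemd sets it when it hands over a socket) and PORT, with a default of 3000, for stand-alone runs; the talk doesn’t say which variable it used.

```javascript
// Sketch: choose the listen() argument depending on how the app was started.
// Assumption: LISTEN_FDS signals socket activation; PORT/3000 are placeholders.
function listenTarget(env = process.env, fallbackPort = 3000) {
  if (env.LISTEN_FDS) {
    // With node-systemd loaded, the string 'systemd' means
    // "use the socket systemd passed in".
    return 'systemd';
  }
  return Number.parseInt(env.PORT, 10) || fallbackPort;
}

// Usage (assumes node-systemd was installed as described above):
// const http = require('http');
// require('systemd');
// http.createServer(handler).listen(listenTarget());
```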

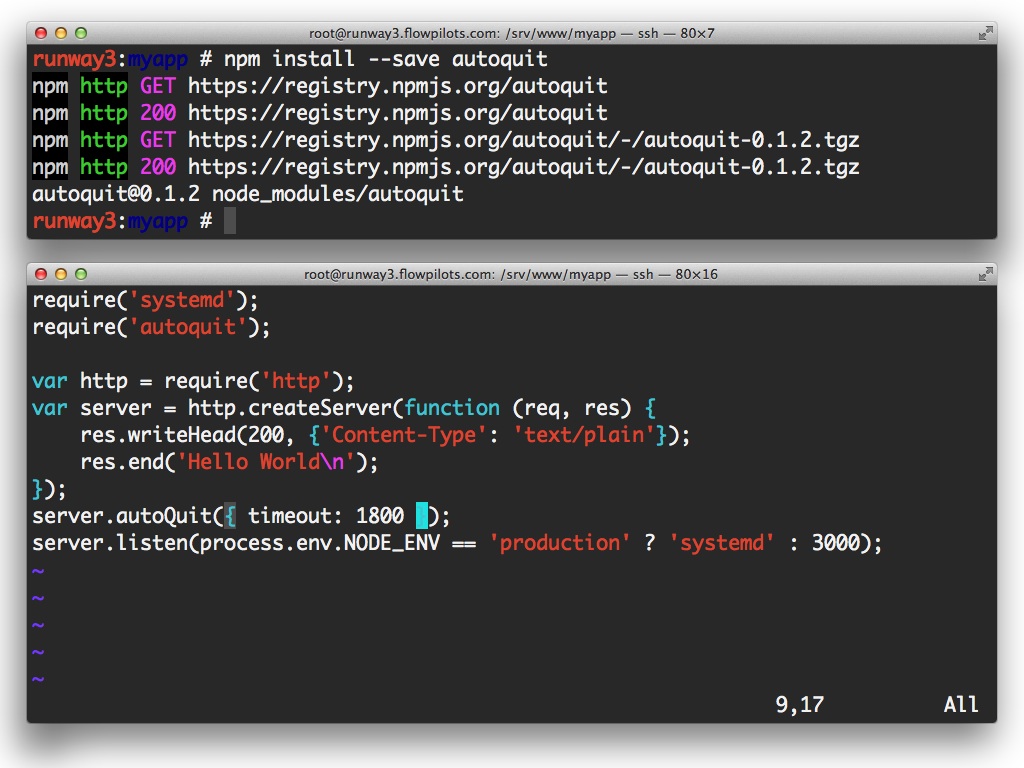

Step 2: Add node-autoquit

Very similar:

- Install the autoquit module

- Call server.autoQuit(). You can optionally specify a time-out (in seconds).

The example above will quit Node.js when it’s been idle for half an hour. We actually use much lower values (5 minutes), and we recommend that you keep this value low.

Now you’ll have a Node.js app that quits when it’s been idle for a couple of minutes.
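To show what “idle” can mean, here is a hand-rolled sketch of the same idea. It is not node-autoquit’s implementation: it counts open connections, and once none have been open for timeoutMs (milliseconds here, unlike autoQuit’s seconds) it closes the server and calls onIdle, which by default exits the process. The clock is injectable so the logic can be tested without waiting.

```javascript
// Sketch of idle auto-quit (illustration only, not node-autoquit's code).
class IdleTracker {
  constructor(timeoutMs, now = Date.now) {
    this.timeoutMs = timeoutMs;
    this.now = now; // injectable clock, handy for tests
    this.openConnections = 0;
    this.lastActivity = now();
  }

  opened() {
    this.openConnections += 1;
    this.lastActivity = this.now();
  }

  closed() {
    this.openConnections -= 1;
    this.lastActivity = this.now();
  }

  // Idle = no open connections and no activity for at least timeoutMs.
  shouldQuit() {
    return this.openConnections === 0 &&
      this.now() - this.lastActivity >= this.timeoutMs;
  }
}

// Attach the tracker to a net/http server and stop once it has been idle.
function autoQuitSketch(server, timeoutMs, onIdle = () => process.exit(0)) {
  const tracker = new IdleTracker(timeoutMs);
  server.on('connection', (socket) => {
    tracker.opened();
    socket.on('close', () => tracker.closed());
  });
  const timer = setInterval(() => {
    if (!tracker.shouldQuit()) return;
    clearInterval(timer);
    // Under socket activation systemd keeps its own copy of the listening
    // socket, so closing ours does not turn new clients away.
    server.close(() => onIdle());
  }, Math.min(timeoutMs, 1000));
  timer.unref(); // the check alone should not keep the process alive
  return tracker;
}
```

With the 5-minute value mentioned above you would call autoQuitSketch(server, 5 * 60 * 1000). Counting connections rather than requests is a deliberate choice: an open websocket keeps the process alive.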

Meanwhile, I can hear you thinking:

Wait a minute, kill my daemon all the time?

That’s just crazy! What about all the state?

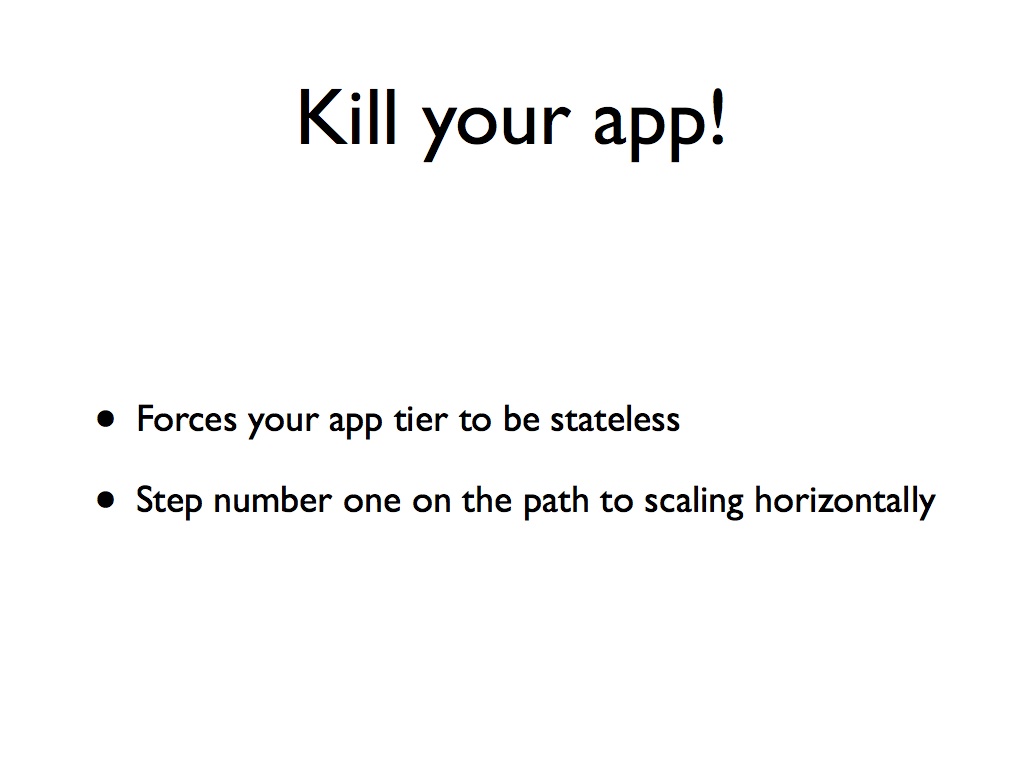

You should really be killing your backend all the time. That forces you to keep state out of it: keep sessions in Mongo, Redis or memcache, and keep all important state out of the app tier.

That’s the first step towards scaling horizontally.
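Here is a small sketch of what keeping state out of the app tier looks like in code. The store is a hypothetical async get/set interface standing in for a Redis, Mongo or memcache client; a Map-backed version keeps the example self-contained. Because the handler holds no state of its own, a handler in a freshly started process picks up where the old one left off.

```javascript
// Sketch: keep per-user state in an external store, not in the process.
// createMapStore() is a hypothetical stand-in for a Redis/Mongo/memcache client.
function createMapStore() {
  const data = new Map();
  return {
    async get(key) { return data.get(key); },
    async set(key, value) { data.set(key, value); },
  };
}

// The handler has no module-level state, so any process, including one that
// systemd has just (re)started, can serve the next request.
function createVisitHandler(store) {
  return async function countVisit(sessionId) {
    const key = `visits:${sessionId}`;
    // Read-modify-write is fine for a demo; with several processes, prefer an
    // atomic operation such as Redis INCR.
    const visits = ((await store.get(key)) || 0) + 1;
    await store.set(key, visits);
    return visits;
  };
}
```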

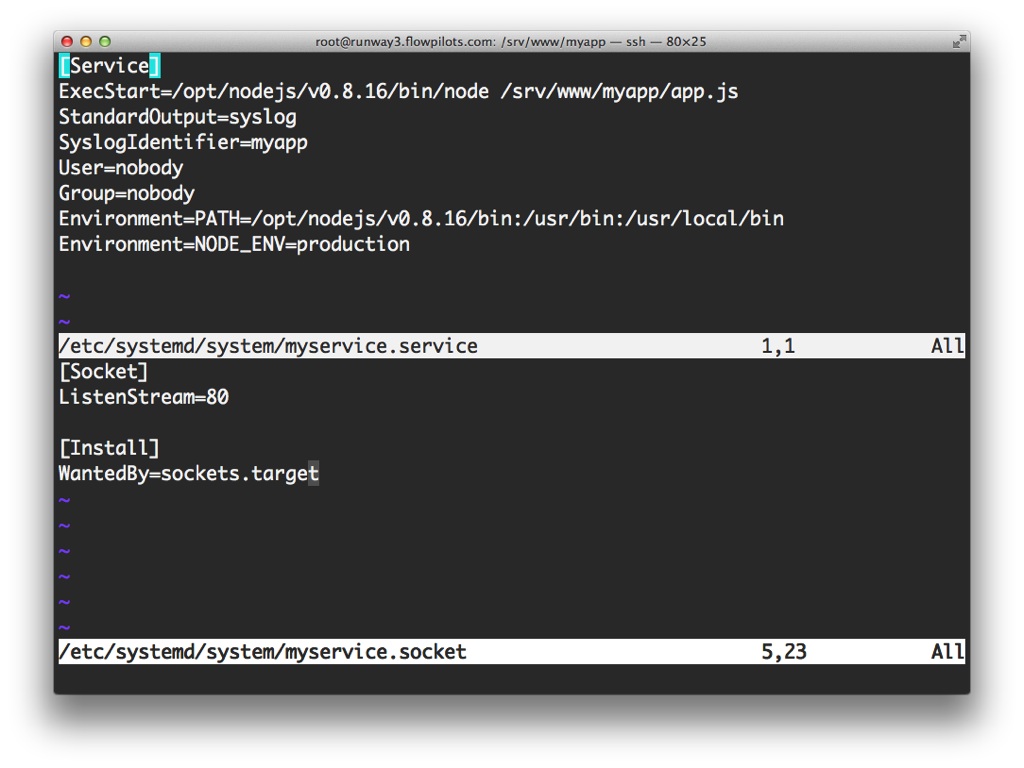

Step 3: Configure systemd

Split up the service file:

- Remove the Restart directive. We don’t want that anymore.

- Also remove the Install section. We don’t want the service started automatically.

- Add a socket unit file. This one defines which port systemd should listen on. Systemd matches it to the service by unit name: a .socket unit activates the .service unit with the same name.

I recommend you don’t listen on TCP ports directly. Put an nginx reverse proxy in front of Node.js and communicate via Unix sockets (node-systemd supports that).

You can then let nginx handle serving static files, SSL termination and gzip compression.
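Here is a sketch of the split, again as a small renderer. The unit name, paths and Unix socket location are hypothetical. Two details are worth noting: systemd pairs foo.socket with foo.service by default, which is the name matching mentioned above, and since the service no longer has an Install section, the socket unit carries one (WantedBy=sockets.target) so that enabling it makes it listen again after a reboot.

```javascript
// Sketch: render a socket unit and the trimmed, socket-activated service.
// All names and paths are placeholders.
function renderSocketActivatedUnits({ name, nodeBin, script, listen }) {
  const service = [
    '[Service]',
    `ExecStart=${nodeBin} ${script}`,
    'Environment=NODE_ENV=production',
    // No Restart= and no [Install]: the socket decides when the app runs.
    '',
  ].join('\n');
  const socket = [
    '[Socket]',
    // A TCP port (e.g. 8080) or, behind nginx, a Unix socket path.
    `ListenStream=${listen}`,
    '',
    '[Install]',
    // Start listening at boot once the socket is enabled.
    'WantedBy=sockets.target',
    '',
  ].join('\n');
  // Matching base names are what tie the two units together.
  return { [`${name}.service`]: service, [`${name}.socket`]: socket };
}

const units = renderSocketActivatedUnits({
  name: 'node-sample',
  nodeBin: '/opt/node/bin/node',
  script: '/srv/www/node-sample/server.js',
  listen: '/run/node-sample.sock',
});
for (const [file, text] of Object.entries(units)) console.log(`# ${file}\n${text}`);
```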

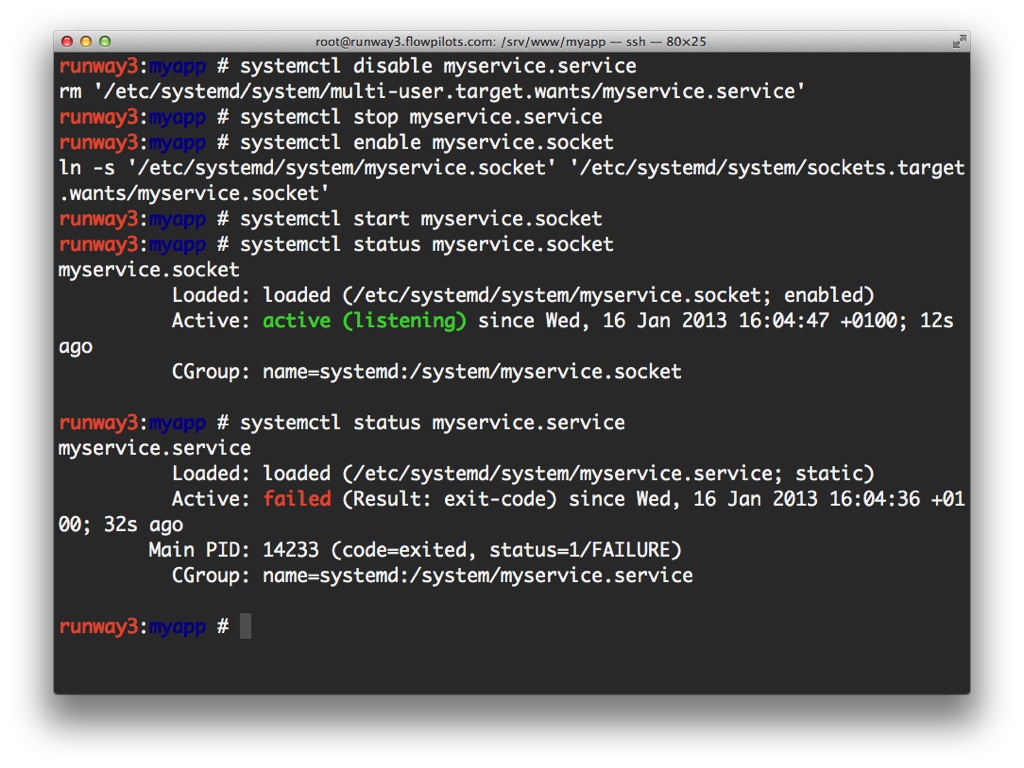

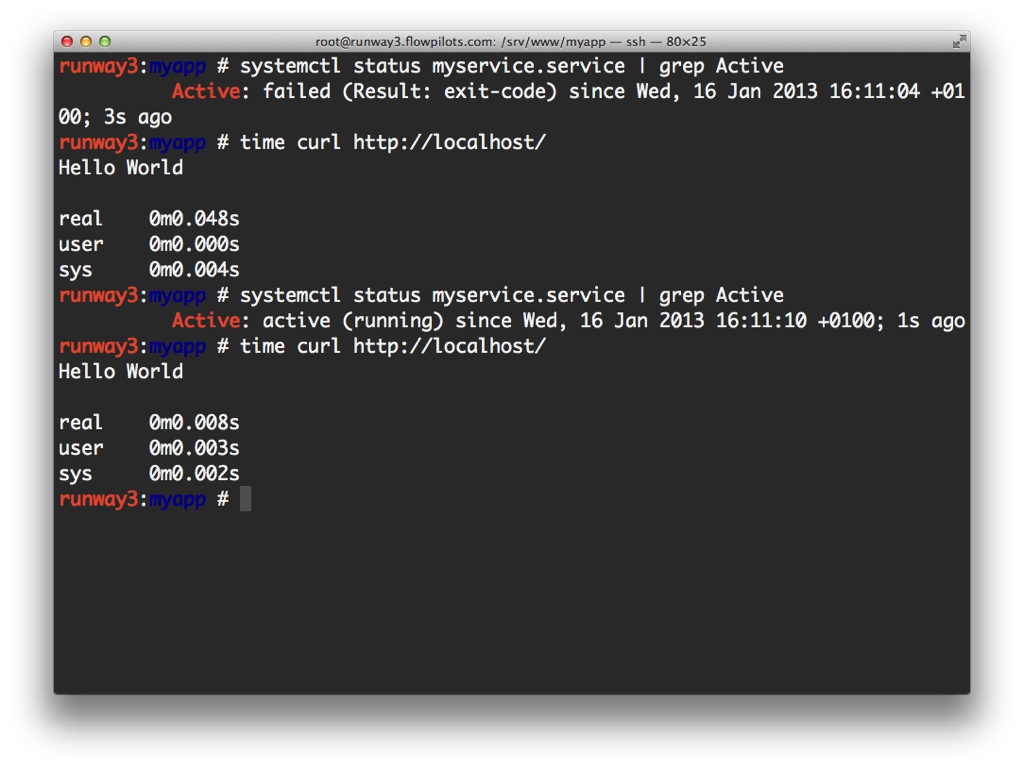

Stop the previously-running service and start the socket instead. We can use status to verify that the socket is listening and the service is not running.

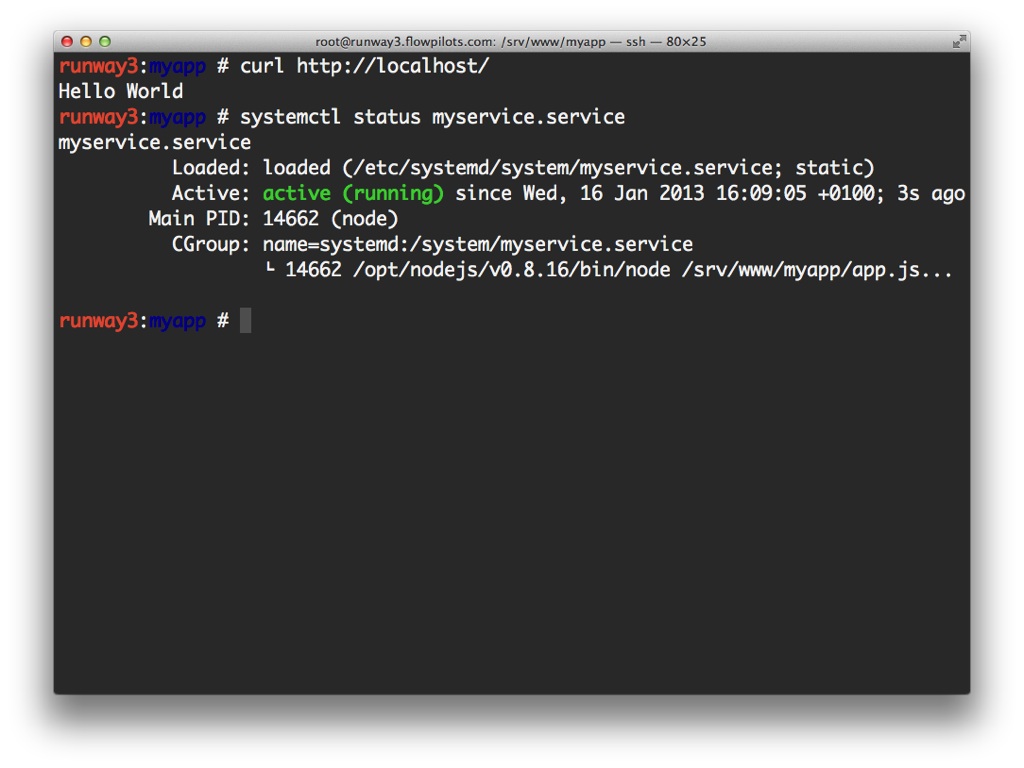

When a call comes in, we’ll see that the service gets started:

Again, this is completely transparent: the user will not notice it.

After a while, the service will shut down again automatically.

Monitoring

Systemd can do lots of other things. One of the nicer ones is the built-in logging facility, which we use for monitoring.

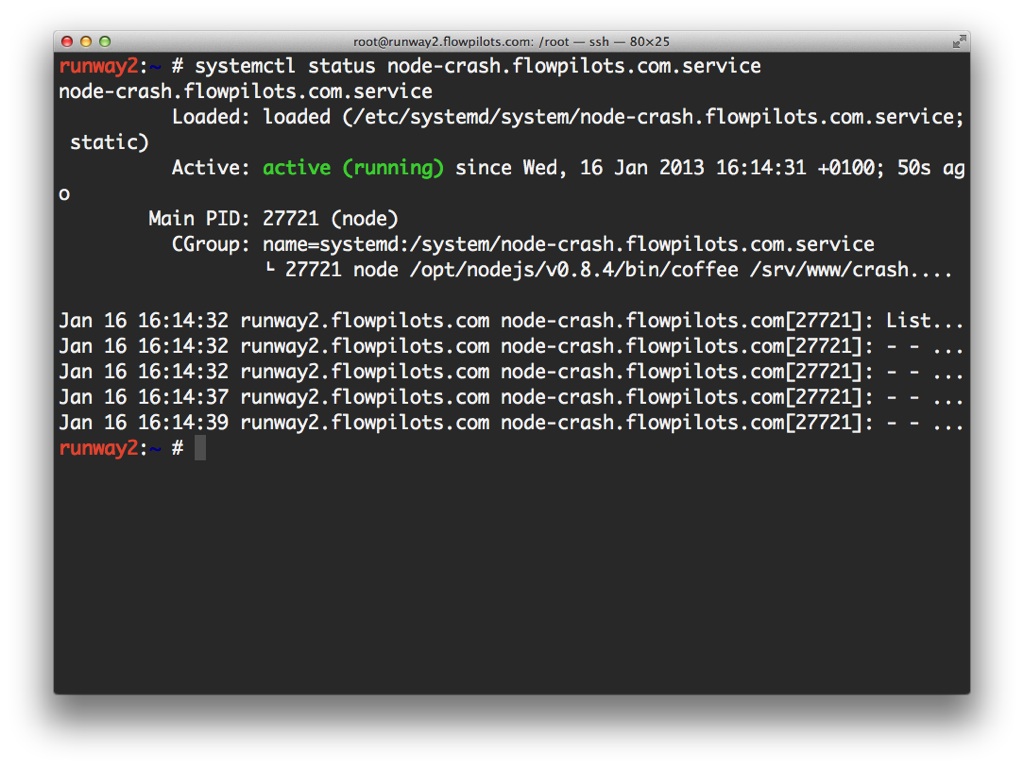

Here’s the output of a status command. There’s a lot of information in this screen, but for now we care about two things:

- At the bottom you can see log output. Any output that Node.js generates is captured by systemd. You can even follow the log messages as they come in, using journalctl with the -f parameter.

- Slightly higher, there’s a CGroup listing. Here’s where it gets interesting!

Systemd exposes a lot of advanced functionality from the Linux kernel. One of these features is control groups (CGroups): a way of grouping processes. Each service gets its own CGroup under systemd, and if the service spawns child processes, they end up in the same CGroup as well.

This means all output from spawned child processes will end up in the logging output of the service as well. That way you don’t miss anything.

All of this is possible thanks to the journal, a modern replacement for syslog. Rather than logging to flat files, it uses a structured data store, which lets you attach more meta-data to your log messages.

Consider the implications of this:

- You can attach app-specific meta-data to each log message, such as the user ID of the currently active user.

- All logging from early kernel boot to the highest app level uses this functionality, so you can see anything that happens in the wider context of the system.

- It’s structured, so you can do advanced queries on it. It even supports JSON output out of the box!

And as an extra, it supports network aggregation, so you can combine and correlate the logs of a whole cluster of machines. You could even use that to trace users across app tiers.
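Because each entry is structured, the JSON output is easy to consume from Node.js as well. journalctl -o json prints one JSON object per line; the sketch below filters such output on a single field. USER_ID is a hypothetical app-specific field, and journalctl can do simple matches itself (journalctl USER_ID=42), so this approach is mainly useful for queries journalctl can’t express.

```javascript
// Sketch: filter the line-delimited JSON produced by `journalctl -o json`.
// Journal field values arrive as strings (non-text values may be byte
// arrays, which never match here).
function filterJournal(jsonLines, field, value) {
  return jsonLines
    .split('\n')
    .filter((line) => line.trim() !== '')
    .map((line) => JSON.parse(line))
    .filter((entry) => entry[field] === String(value));
}

// Usage (the unit name is hypothetical):
// const { execFileSync } = require('child_process');
// const out = execFileSync('journalctl',
//   ['-u', 'node-sample.service', '-o', 'json'], { encoding: 'utf8' });
// console.log(filterJournal(out, 'USER_ID', 42).map((e) => e.MESSAGE));
```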

Adding this to your Node.js app is simple. Just install the journald module and grab a reference to a Log object. Use the log method to write out log messages, with a dictionary of meta-data as the parameter.
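To see what such a call sends, here is a sketch of journald’s native protocol encoding, roughly what a binding has to produce. This is our own illustration, not the journald module’s code, and it only encodes the entry. Node’s dgram module can’t send to Unix datagram sockets, so the actual transmission to /run/systemd/journal/socket is left to a binding. The USER_ID field is a hypothetical example of app-specific meta-data.

```javascript
// Sketch of journald's native protocol encoding (illustration only).
// Field names: uppercase letters, digits and '_', no leading '_' or digit,
// at most 64 characters.
const FIELD_NAME = /^[A-Z][A-Z0-9_]{0,63}$/;

function encodeJournalEntry(fields) {
  const parts = [];
  for (const [name, raw] of Object.entries(fields)) {
    // Names starting with '_' are reserved for trusted fields set by journald.
    if (!FIELD_NAME.test(name)) throw new Error(`invalid journal field name: ${name}`);
    const value = Buffer.from(String(raw), 'utf8');
    if (!value.includes(0x0a)) {
      // Simple form: NAME=value\n
      parts.push(Buffer.from(`${name}=`), value, Buffer.from('\n'));
    } else {
      // Binary-safe form for values containing newlines:
      // NAME\n, 64-bit little-endian length, raw bytes, \n
      const length = Buffer.alloc(8);
      length.writeBigUInt64LE(BigInt(value.length));
      parts.push(Buffer.from(`${name}\n`), length, value, Buffer.from('\n'));
    }
  }
  return Buffer.concat(parts);
}

// Example entry with hypothetical app-specific meta-data (PRIORITY 6 = info).
const entry = encodeJournalEntry({ MESSAGE: 'user logged in', PRIORITY: 6, USER_ID: 42 });
console.log(entry.toString());
```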

More fun stuff

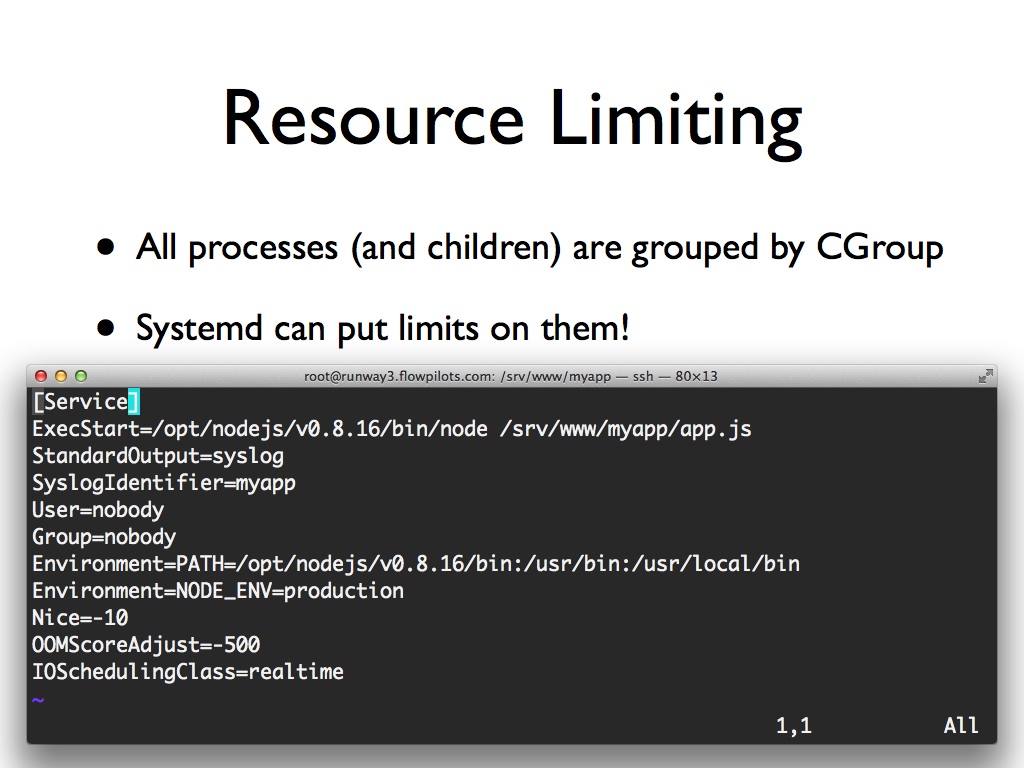

The CGroup mechanism can also be used for resource limiting. In the example above, I raised the CPU priority, adjusted the Out-Of-Memory score so the service won’t be killed when the system runs out of memory, and gave it top IO priority. In other words: I made it an extremely mission-critical service.

You can use this in the other direction as well: configure block IO limits to prevent any single process from using up all of the resources on your server. That’s a great thing to have when you run lots of apps, especially on a shared system where you don’t trust them all.
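A quick way to confirm from inside the service that such settings took effect is to read them back. This sketch assumes Linux and assumes the CPU priority was raised with Nice=; settings such as CPUShares= or the IO scheduling class don’t show up in these two values.

```javascript
// Sketch: read back priority settings from inside the running service.
// Assumes Linux; the CPU check only reflects Nice=, not CPUShares=.
const fs = require('fs');
const os = require('os');

function resourceSnapshot(oomFile = '/proc/self/oom_score_adj') {
  let oomScoreAdj = null;
  try {
    // OOMScoreAdjust= ends up here; -1000 means "never OOM-kill".
    oomScoreAdj = Number.parseInt(fs.readFileSync(oomFile, 'utf8'), 10);
  } catch {
    // No /proc (not Linux): report null instead of failing.
  }
  // os.getPriority() with no argument returns this process's nice value.
  return { nice: os.getPriority(), oomScoreAdj };
}

console.log(resourceSnapshot());
```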

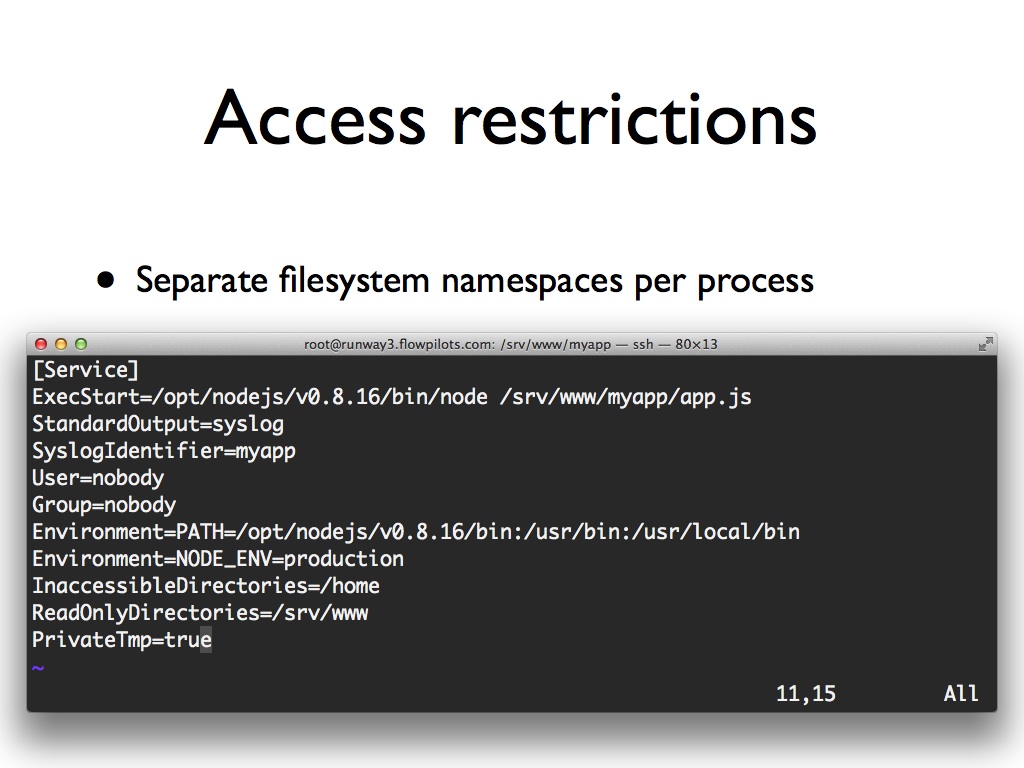

Systemd can set up a virtual filesystem for each service and impose some extra access restrictions. Here we prevent access to /home and we make /srv/www read-only, even for root. We also create a private /tmp folder, to prevent information leakage between applications.
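The same read-back trick works for these filesystem restrictions. The sketch below uses the paths from the example and assumes the service runs as an unprivileged user. A write check on a read-only mount fails with EROFS even for root, so the /srv/www result holds either way.

```javascript
// Sketch: probe the sandbox from inside the service (paths from the text).
const fs = require('fs');

function canAccess(path, mode) {
  try {
    fs.accessSync(path, mode);
    return true;
  } catch {
    // EACCES, EROFS, ENOENT, ...: treat every failure as "no access".
    return false;
  }
}

function sandboxReport() {
  return {
    homeReadable: canAccess('/home', fs.constants.R_OK),   // expect false
    wwwWritable: canAccess('/srv/www', fs.constants.W_OK), // expect false
  };
}

console.log(sandboxReport());
```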

The future

We now have automatically activating Node.js services, complete with advanced monitoring and resource policies. That’s great: you could build a very nice system for hosting lots of applications with that.

But it’s not flawless. Each application still lives on the same system and sometimes you want even more separation.

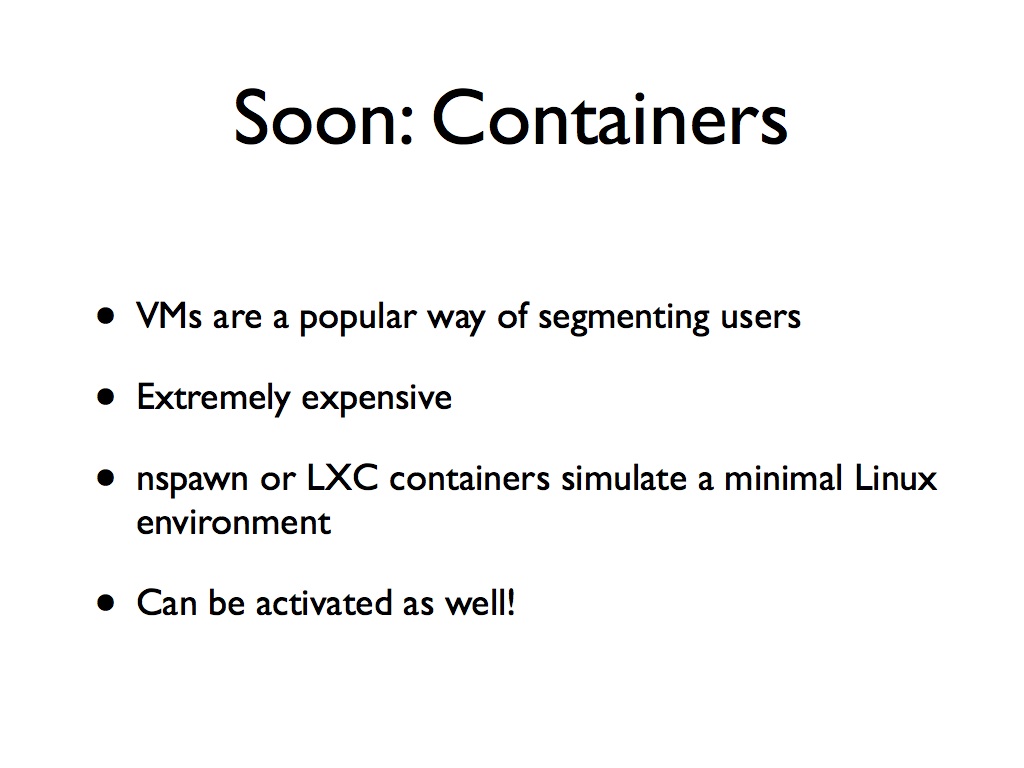

Virtual machines are a very popular way of segmenting your users. Each one lives in its own virtual world and cannot see the others. Unfortunately, this comes at a high cost in memory, plus some CPU overhead.

Systemd is offering an alternative with systemd-nspawn or LXC containers. These are very minimal (but fully-functional) Linux environments. For the app it will seem as if it is on its own machine, yet it’s a lot more lightweight.

The best part: soon you’ll be able to use socket activation across containers, so systemd will be able to spawn containers on demand.

Wrap-up

We’ve seen that with very little effort, you can have a very modern deployment environment for Node.js. Give it a go!

Resources

- Systemd website (read all 20 parts of systemd for system administrators; they’re worth it, and this talk only covers the tip of the iceberg)

- node-systemd

- node-autoquit

- journald bindings

Backup slides

This is extremely unscientific, but it shows that there’s minimal overhead to starting Node.js. For reference: 40 milliseconds of overhead is shorter than the time it takes to do a DNS lookup.
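To repeat the measurement on your own hardware, here is a rough sketch: spawn a node process that does nothing and time it. It is just as unscientific as the original (no control for caches or system load), and it measures plain process start-up, not the socket hand-over.

```javascript
// Sketch: time how long it takes to start (and exit) an empty node process.
const { spawnSync } = require('child_process');

function measureNodeStartupMs(runs = 5) {
  const samples = [];
  for (let i = 0; i < runs; i++) {
    const start = process.hrtime.bigint();
    const result = spawnSync(process.execPath, ['-e', '']);
    if (result.status !== 0) throw new Error('node did not start cleanly');
    samples.push(Number(process.hrtime.bigint() - start) / 1e6); // ns -> ms
  }
  samples.sort((a, b) => a - b);
  return samples[Math.floor(samples.length / 2)]; // median, in milliseconds
}

console.log(`median node start-up: ${measureNodeStartupMs().toFixed(1)} ms`);
```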